Tuesday, June 19, 2012

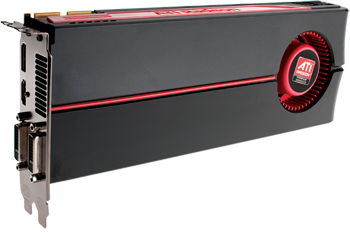

Performance Of Intel Core i5 3470: HD 2500 Graphics Tested

Posted by Brad Wasson in "Digital Home Talk" @ 11:00 AM

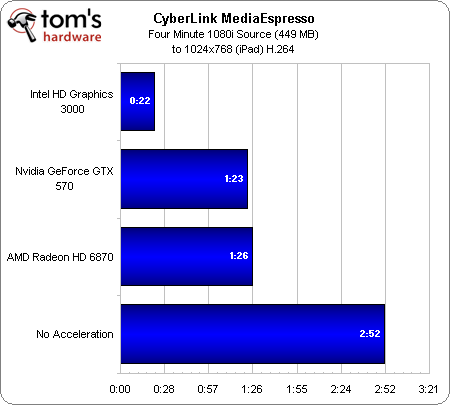

"Intel's Core i5-3470 is a good base for a system equipped with a discrete GPU. You don't get the heavily threaded performance of the quad-core, eight-thread Core i7 but you're also saving nearly $100. For a gaming machine or anything else that's not going to be doing a lot of thread heavy work (e.g. non-QuickSync video transcode, offline 3D rendering, etc...) the 3470 is definitely good enough."

Many of our readers are interested in detailed specifications and performance analyses of CPUs and GPUs. Few sites are better placed than AnandTech to conduct a thorough analysis, as they have done for this new Intel Core i5 chip. Their conclusion? Paired with a discrete GPU, the i5-3470 is good enough for gaming and other lightly threaded work, but if you do a lot of thread-heavy work, such as non-QuickSync video transcoding or offline 3D rendering, you will want to look at the Core i7 instead.